In the early hours of a somber morning nearly 30 years ago, the town of Dunblane, Scotland, was scarred forever by an atrocity that claimed the lives of 16 children and their teacher. The massacre, as horrific as it was, catalyzed a national movement that led to sweeping gun control reforms in the UK. It was a collective moment of unity and decisive action. Yet, today, as the UK grapples with the shocking violence in Southport, we find ourselves in a vastly different reality, one where unity is a distant memory and divisiveness is the new norm.

The massacre in Southport, which shattered the peace of a local dance class, has unleashed a torrent of social unrest across Britain. This is not the mobilized, purpose-driven outcry we saw in Dunblane’s aftermath but a chaotic, frenzied reaction fueled by misinformation and exacerbated by the very platforms we use to connect with each other. The contrast is stark and disturbing, pointing to a fundamental shift in our societal fabric.

There’s always been violence. What’s brought violence mainstream is social media

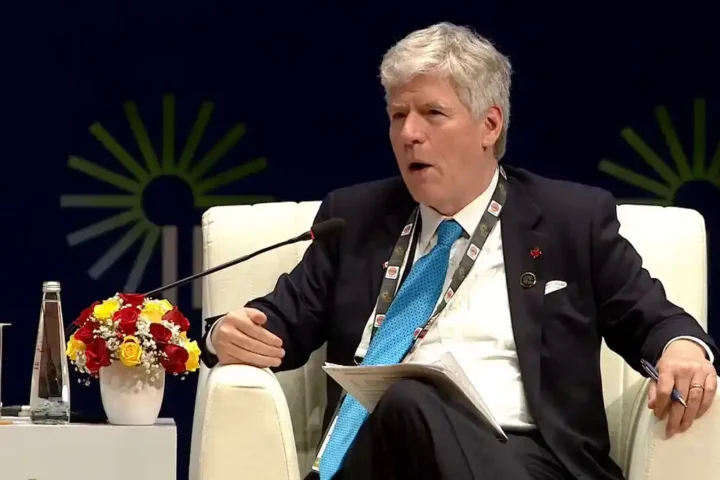

Maria Ressa

Maria Ressa, the Filipino journalist and Nobel laureate, captures this transformation succinctly: “We’ve always had radicalization, but in the past, leaders would be the bridge and bring people together. That’s impossible to do now, because what used to radicalize extremists and terrorists is now radicalizing the public.” This insight gets to the heart of the issue. The digital age has given birth to an algorithmic outrage machine that thrives on polarization and sensationalism.

In 1996, the grief and anger of the British public were channeled into a constructive campaign that resulted in stringent gun laws. Today, the same emotions are being hijacked by social media algorithms designed to amplify the most extreme voices. The result is a society increasingly divided, where misinformation spreads like wildfire, and collective action is supplanted by chaos and confusion.

Politicians now say things they would not have said previously and flirt with conspiracy myths that used to belong to fringe movements

Julia Ebner

Julia Ebner, leader of the Violent Extremism Lab at Oxford University, highlights how this “alternative information ecosystem” has paved the way for far-right ideologies to gain traction. Platforms like Telegram, Parler, and Gab operate beneath the radar of mainstream media, creating echo chambers that foster conspiracy theories and extremism. The recent unrest in Southport is not an isolated incident but part of a global trend where digital misinformation ignites real-world violence.

This phenomenon was starkly evident in the United States on January 6, 2021, when social media-fueled insurrectionists stormed the Capitol. In Southport, we are witnessing a similar pattern, where wild rumors and anti-immigrant rhetoric have escalated into street violence. As Jacob Davey from the Institute for Strategic Dialogue notes, this is “a perfect storm” exacerbated by the re-platforming of far-right figures and the rollback of measures to combat online hate.

The tragic events in Southport underscore a pressing need for comprehensive regulation of social media. Despite growing awareness among academics, researchers, and policymakers, tangible actions remain woefully inadequate. As Ressa warns, unchecked disinformation can have a cumulative, corrosive effect on society, akin to an addiction that fundamentally alters our collective psyche.

The UK authorities, while recognizing the threat of far-right extremism, have yet to address the root technological issues. Investigations into the weaponization of social media by foreign actors have been met with skepticism and resistance, particularly from right-wing media outlets. This week’s revelation about a suspected Russian account spreading false information on X is a stark reminder of the broader, global dimensions of this challenge.

Ebner draws parallels with the rise of far-right movements in other countries, noting how these movements exploit algorithmically powerful emotions like anger and fear. There is a disturbing sense of collective learning among far-right groups across borders, facilitated by these alternative information ecosystems.

As the UK stands at a crossroads, the question looms large: what will Keir Starmer do? Politicians themselves are not immune to radicalization, often employing dog whistles and conspiratorial rhetoric. Proposed solutions, such as increased facial recognition, risk creating further technological harms without addressing the underlying issues.

Ravi Naik of the law firm AWO advocates for a proactive approach, including stricter enforcement by the Information Commissioner’s Office and more decisive police action against incitement. However, these measures alone are insufficient. The scale and complexity of the problem demand a nuanced, long-term strategy and a mature conversation about the future of digital technology and its role in our society.

The Southport tragedy should serve as a wake-up call. We must move beyond reactive, piecemeal measures and confront the deeper structural issues driving our digital dystopia. Only then can we hope to reclaim a semblance of the unity and purpose that once defined our collective response to tragedy.