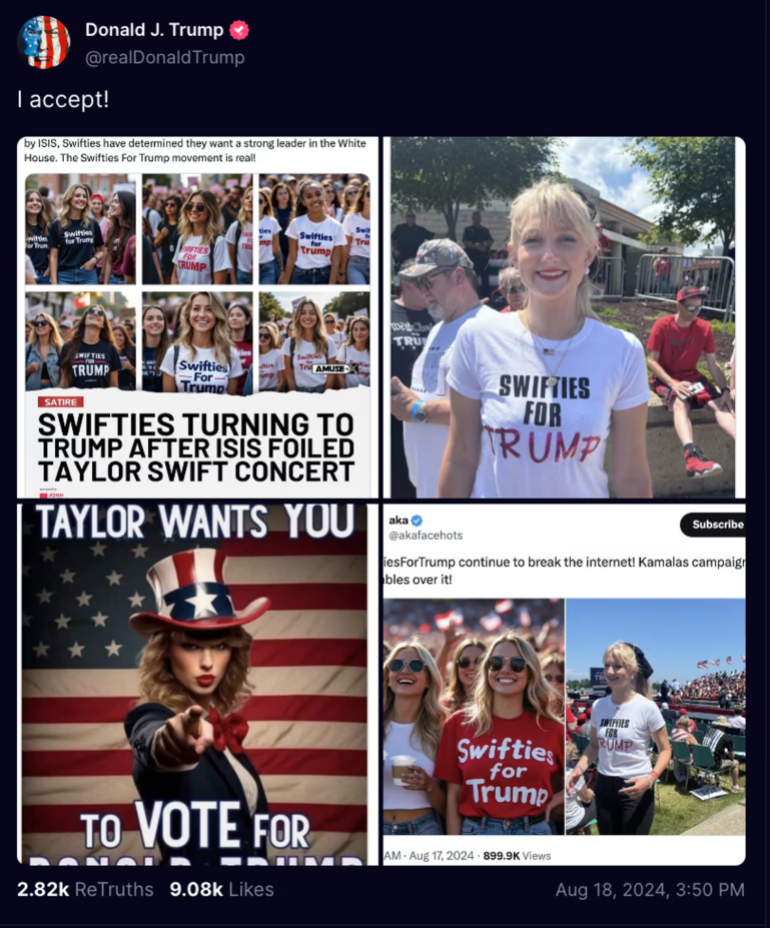

As the US inches closer to the 2024 election, a new kind of chaos is unfolding, one that transcends the traditional political fray. We’re witnessing the dawn of an AI-driven disinformation crisis, where the line between reality and fabrication blurs with alarming ease. The latest flashpoints? A false claim by Donald Trump that photos of large crowds at a Kamala Harris rally were AI-generated, and doctored images implying Taylor Swift’s endorsement of Trump that made the rounds on Truth Social. These incidents are not isolated; they’re part of a broader, more troubling trend.

Just days ago, we learned that an Iranian group allegedly used OpenAI’s ChatGPT to create divisive content aimed at influencing the US election. Meanwhile, Elon Musk’s Grok AI model, a new addition to the X platform, has already been caught spreading misinformation about voting. The scale and sophistication of these AI-generated falsehoods are unprecedented, and they bring to life the very warnings that experts have been issuing for years.

Nathan Lambert, a machine learning researcher at the Allen Institute for AI, predicted this mess back in December when he called the 2024 election a “hot mess.” He was right. As Kamala Harris accepted the Democratic nomination, I found myself double-checking whether the crowds cheering her on were real or the result of some AI trickery. And it’s not just me—social media is awash with speculation about the authenticity of images from both Democratic and Republican events. Are we really at the point where we can’t trust our own eyes?

The rise of AI-generated disinformation marks an insidious shift in our political landscape. In many cases, these AI fakes aren’t even intended to deceive. Instead, they’re designed to provoke outrage, sow doubt, or simply serve as meme fodder that reinforces the biases of a candidate’s base. Yet, whether they’re meant to trick or just to taunt, the end result is the same: a growing sense of mass self-doubt about what is real and what isn’t.

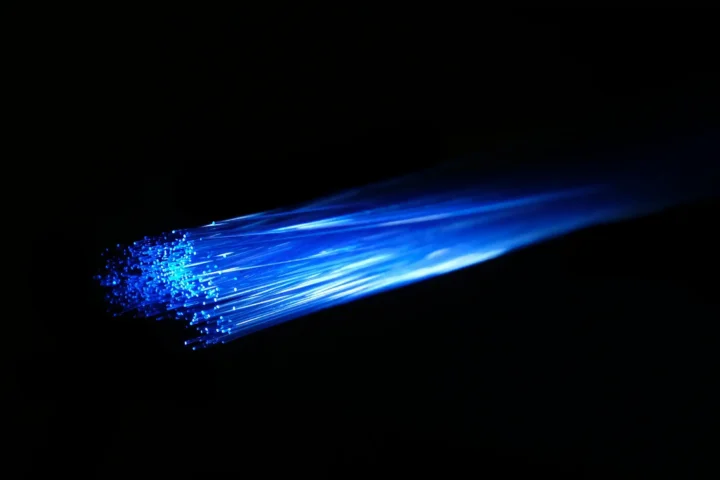

What’s even more frightening is that this could be just the beginning. We’ve already seen the emergence of Deep-Live-Cam, a tool that can create high-quality deepfake videos in real time. Imagine a scenario where, with a single image, someone could convincingly impersonate a public figure like Elon Musk, broadcasting messages that seem real but are entirely fabricated. This technology, combined with AI-generated voice clones, could take disinformation to a whole new level, one where it’s nearly impossible to tell fact from fiction in live video.

As if that weren’t bad enough, the platforms where these AI-generated media are shared—social media giants like X (formerly Twitter), Facebook, and Instagram—are ill-equipped to handle the surge of deepfakes and disinformation. Election integrity teams have been sharply downsized, leaving a vacuum that bad actors are all too eager to fill. If this is where we are in August, what will December look like?

With federal AI regulation stalled until after the election, US voters are left in a precarious position. The safeguards that could have helped navigate this new era of AI-driven disinformation are nowhere in sight, leaving us to weather the storm on our own. It’s a nightmare scenario, one where the truth is constantly under siege and the very foundation of our democracy, an informed electorate, is at risk.

So, as the US hurtles toward Election Day, the question isn’t just about who will win or lose. It’s about whether we can even trust what we see, hear, and read. In this AI election nightmare, that’s the most terrifying uncertainty of all.